🤖 Powering the Next Generation of Smart Robots: Introducing Gemini Robotics 1.5

9/27/2025 · 2 min read

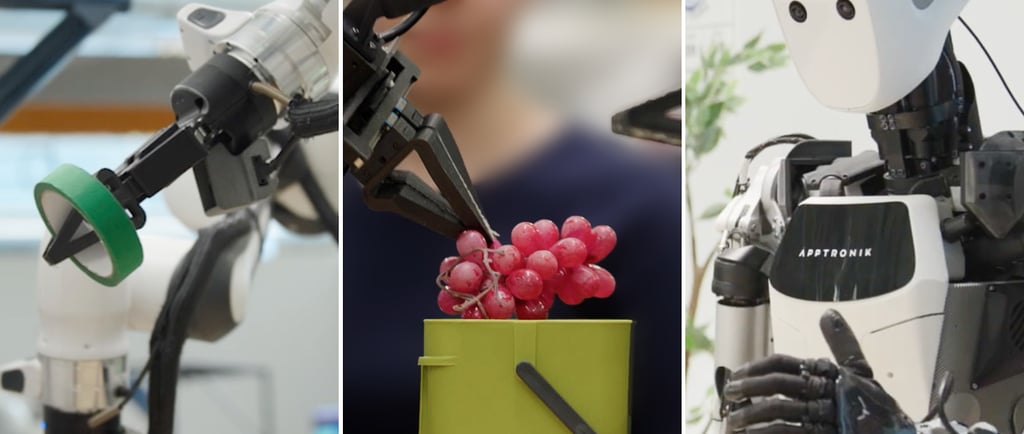

We are entering a new era of Physical Agents—robots that can perceive, plan, think, use tools, and act to solve complex, real-world tasks.

Building on the success of the original Gemini Robotics models, we're taking a major leap forward to create truly general-purpose intelligent robots.

🌟 Two New Models for Advanced Robotic Intelligence

We're introducing two powerful new models that unlock advanced "agentic" (self-planning) experiences for physical tasks:

| Model Name | Core Capability | Key Features |
|---|---|---|
| Gemini Robotics 1.5 | Vision-Language-Action (VLA) model: the robot's motor control and executor. | Thinks Before Acting: transparently shows its thought process before performing a step. Learns Across Robots: skills learned on one robot can be quickly applied to others. |
| Gemini Robotics-ER 1.5 | Vision-Language Model (VLM) for Embodied Reasoning (ER): the robot's high-level brain and planner. | Advanced Planning: creates detailed, multi-step plans for complex missions. Uses Tools: can natively use digital tools like Google Search and other third-party functions. State-of-the-Art Spatial Understanding. |

Export to Sheets

🧠 How the Models Work Together

These models work as an Agentic Framework to solve tough, multi-step problems:

High-Level Brain (Gemini Robotics-ER 1.5): Acts as the orchestrator.

It receives a complex task (e.g., "Sort these objects into the correct bins based on my local rules.").

It reasons about the physical world, uses tools (like searching the web for local recycling guidelines), and creates a detailed, step-by-step plan.

It then hands the plan to the action model one step at a time, as simple natural-language instructions.

Action & Execution (Gemini Robotics 1.5): Acts as the executor.

It takes the natural language instruction for a single step.

It uses its vision to understand the environment and executes the required motor commands.

It can even explain its thinking process for greater transparency.
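To make this division of labor concrete, here is a minimal Python sketch of the orchestrator/executor loop described above. It is an illustration under stated assumptions, not how Gemini Robotics is implemented: `plan_task`, `look_up_rules`, `execute_step`, and `run_agent` are hypothetical stand-ins, no Gemini API is called, the "web search" returns hard-coded rules, and motor control is reduced to a stub that only records the executor's stated reasoning.

```python
"""Hypothetical sketch of a planner/executor (orchestrator/executor) loop.

None of these functions are Google APIs; they only illustrate the roles
that Gemini Robotics-ER 1.5 (planner) and Gemini Robotics 1.5 (executor)
play in the agentic framework.
"""
from dataclasses import dataclass


@dataclass
class StepResult:
    instruction: str  # the single natural-language instruction the executor received
    thought: str      # the executor's stated reasoning before acting
    success: bool


def look_up_rules(city: str) -> dict[str, str]:
    """Hypothetical tool call standing in for a web search for local recycling rules.

    This stub ignores `city` and returns hard-coded example data.
    """
    return {"bottle": "recycling", "banana peel": "compost", "chip bag": "trash"}


def plan_task(objects: list[str], city: str) -> list[str]:
    """Hypothetical planner: fetch rules via a tool, then emit one instruction per step."""
    rules = look_up_rules(city)
    # Objects with no known rule default to the trash bin in this sketch.
    return [f"Put the {obj} in the {rules.get(obj, 'trash')} bin." for obj in objects]


def execute_step(instruction: str) -> StepResult:
    """Hypothetical executor: states its reasoning, then (in a real robot) would act.

    Motor control is out of scope, so this stub always reports success.
    """
    thought = f"I need to locate the object and carry out: {instruction!r}"
    return StepResult(instruction=instruction, thought=thought, success=True)


def run_agent(objects: list[str], city: str) -> list[StepResult]:
    """Orchestrator: plan once, then hand steps to the executor one at a time."""
    results: list[StepResult] = []
    for instruction in plan_task(objects, city):
        result = execute_step(instruction)
        results.append(result)
        if not result.success:
            break  # a real orchestrator might replan here instead of stopping
    return results


if __name__ == "__main__":
    for step in run_agent(["bottle", "banana peel", "chip bag"], city="Springfield"):
        print(step.instruction)
        print("  thought:", step.thought)
```

Keeping the planner and executor separate means the executor only ever sees one short instruction at a time, while tool use and multi-step reasoning stay with the planner.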

🚀 What This Means for Developers

These advances will help developers build robots that are:

More Capable: Can handle complex, multi-step instructions.

More Versatile: Can adapt and understand their environment to solve general tasks.

Get Started Today

Gemini Robotics-ER 1.5 is available now to developers via the Gemini API in Google AI Studio.

Gemini Robotics 1.5 is currently available to select partners.

👉 Read more about building with the next generation of physical agents on the Developer Blog!